%%html

<style>

@import url('https://fonts.googleapis.com/css?family=Orbitron|Roboto');

body {background-color: #b8e2fc;}

a {color: royalblue; font-family: 'Roboto';}

h1 {color: #191970; font-family: 'Orbitron'; text-shadow: 4px 4px 4px #ccc;}

h2, h3 {color: slategray; font-family: 'Orbitron'; text-shadow: 4px 4px 4px #ccc;}

h4 {color: royalblue; font-family: 'Roboto';}

span {text-shadow: 4px 4px 4px #ccc;}

div.output_prompt, div.output_area pre {color: slategray;}

div.input_prompt, div.output_subarea {color: #191970;}

div.output_stderr pre {background-color: #b8e2fc;}

div.output_stderr {background-color: slategrey;}

</style>

<script>

code_show = true;

function code_display() {

if (code_show) {

$('div.input').each(function(id) {

if (id == 0 || $(this).html().indexOf('hide_code') > -1) {$(this).hide();}

});

$('div.output_prompt').css('opacity', 0);

} else {

$('div.input').each(function(id) {$(this).show();});

$('div.output_prompt').css('opacity', 1);

};

code_show = !code_show;

}

$(document).ready(code_display);

</script>

<form action="javascript: code_display()">

<input style="color: #191970; background: #b8e2fc; opacity: 0.8;"

type="submit" value="Click to display or hide code cells">

</form>

hide_code = ''

# The notebook targets the legacy stack it was written for: SciPy < 1.2
# (scipy.ndimage.imread, scipy.misc.imresize), TensorFlow 1.x
# (tensorflow.examples.tutorials) and standalone Keras 2.x.
import os
import sys
import random
import tarfile
import warnings
from time import time

import numpy as np
import pandas as pd
import cv2
import h5py
import scipy as sp
import scipy.ndimage
import scipy.misc
from scipy.special import expit
from six.moves.urllib.request import urlretrieve
from six.moves import cPickle as pickle

import tensorflow as tf
import tensorflow.examples.tutorials.mnist as mnist
from skimage.feature import hog
try:
    from sklearn.externals import joblib
except ImportError:  # sklearn.externals.joblib was removed in scikit-learn 0.23
    import joblib
from sklearn.neural_network import MLPClassifier, BernoulliRBM
from sklearn import linear_model, datasets, metrics
from sklearn.pipeline import Pipeline
from sklearn import manifold, decomposition, ensemble
from sklearn import discriminant_analysis, random_projection
from sklearn.model_selection import train_test_split

import keras as ks
from keras.models import Sequential, load_model, Model
from keras.preprocessing import sequence
from keras.optimizers import SGD, RMSprop
from keras.layers import Dense, Dropout, LSTM
from keras.layers import Activation, Flatten, Input, BatchNormalization
from keras.layers import Conv1D, MaxPooling1D, Conv2D, MaxPooling2D, GlobalAveragePooling2D
from keras.layers.embeddings import Embedding
from keras.callbacks import ModelCheckpoint

from IPython.display import display, Image, IFrame
import matplotlib.pyplot as plt
import matplotlib.cm as cm
from matplotlib import offsetbox
%matplotlib inline

warnings.filterwarnings('ignore')

hide_code

def fivedigit_label(label):
    """Left-pad a list of digits to length 5 with the blank class 10."""
    size = len(label)
    if size >= 5:
        return label
    num_blanks = np.full(5 - size, 10)
    return np.concatenate((num_blanks, label), axis=0)


def get_filenames(folder):
    """Return the names of all .png files in `folder`."""
    return np.array([f for f in os.listdir(folder) if f.endswith('.png')])


def get_image(folder, image_file):
    """Return (RGB 32x32 image scaled to [0, 1], grayscale 32x32 image, label)."""
    filename = os.path.join(folder, image_file)
    # scipy.ndimage.imread and scipy.misc.imresize exist only in old SciPy releases.
    image = scipy.ndimage.imread(filename, mode='RGB')
    label = None  # only images from the 'new' folder have known labels
    if folder == 'new':
        n = np.where(new_filenames == image_file)[0]
        label = new_labels[n]
    image32_1 = scipy.misc.imresize(image, (32, 32, 3)) / 255
    # Luminance-weighted grayscale conversion.
    image32_2 = np.dot(np.array(image32_1, dtype='float32'), [0.299, 0.587, 0.114])
    return image32_1, image32_2, label


def digit_to_categorical(data):
    """One-hot encode an (n, positions) label array into (n, positions, 11)."""
    n = data.shape[1]
    data_cat = np.empty([len(data), n, 11])
    for i in range(n):
        data_cat[:, i] = ks.utils.to_categorical(data[:, i], num_classes=11)
    return data_cat
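The label encoding above pads every house number to five positions with the filler class 10 and then one-hot encodes each position into 11 classes. A NumPy-only sketch of the same scheme makes the resulting shapes explicit; `encode_labels` is a hypothetical name, `np.eye` stands in for `ks.utils.to_categorical`, and labels are assumed to have at most five digits.

```python
import numpy as np

BLANK = 10          # filler class for missing leading digits
NUM_CLASSES = 11    # digits 0-9 plus the blank class


def encode_labels(labels, length=5):
    """Left-pad each digit list with BLANK and one-hot encode every position.

    Assumes no label is longer than `length`.
    Returns an array of shape (n_samples, length, NUM_CLASSES).
    """
    padded = np.array([[BLANK] * (length - len(l)) + list(l) for l in labels])
    # Indexing an identity matrix with integer labels yields one-hot rows.
    return np.eye(NUM_CLASSES)[padded]


encoded = encode_labels([[8], [5, 6, 3]])
# A five-output Keras model expects one target array per output head.
targets = [encoded[:, i] for i in range(5)]
print(encoded.shape, len(targets), targets[0].shape)
```

Splitting the `(n, 5, 11)` array into a list of five `(n, 11)` arrays mirrors `y_new_cat_list` later in the notebook.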

hide_code

def get_image2(folder, image_file, boxes):
    """Crop the image with its hand-made box, then process it as in get_image."""
    filename = os.path.join(folder, image_file)
    image = scipy.ndimage.imread(filename, mode='RGB')
    # Box layout: [row_start, row_end, col_start, col_end].
    box = boxes.loc[image_file]
    image = image[box[0]:box[1], box[2]:box[3]]
    label = None
    if folder == 'new':
        n = np.where(new_filenames == image_file)[0]
        label = new_labels[n]
    image32_1 = scipy.misc.imresize(image, (32, 32, 3)) / 255
    image32_2 = np.dot(np.array(image32_1, dtype='float32'), [0.299, 0.587, 0.114])
    return image32_1, image32_2, label


def get_image3(folder, image_file, boxes):
    """Same crop as get_image2, with OpenCV grayscale conversion and bicubic resizing."""
    filename = os.path.join(folder, image_file)
    image = cv2.imread(filename)  # OpenCV loads images in BGR order
    box = boxes.loc[image_file]
    image = image[box[0]:box[1], box[2]:box[3]]
    image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    # image_hist = cv2.equalizeHist(image_gray)
    image_r = cv2.resize(image_gray, (32, 32), interpolation=cv2.INTER_CUBIC)
    label = None
    if folder == 'new':
        n = np.where(new_filenames == image_file)[0]
        label = new_labels[n]
    return image_r, label


def get_image4(folder, image_file):
    """Locate the digits automatically: blur, detect Canny edges and crop the
    grayscale image to the bounding rectangle of the edge pixels."""
    filename = os.path.join(folder, image_file)
    img = cv2.cvtColor(cv2.imread(filename), cv2.COLOR_BGR2RGB)
    # 7x7 Gaussian blur (sigma computed from the kernel size), then trim a 3-pixel border.
    img = cv2.GaussianBlur(img, (7, 7), -1)[3:-3, 3:-3]
    # The image is RGB at this point, so convert with RGB2GRAY, not BGR2GRAY.
    img_gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)
    edges = cv2.Canny(img_gray, 100, 200)
    # img[edges!=0] = (255, 255, 255)
    # img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    x, y, w, h = cv2.boundingRect(edges)
    n1, n2, n3, n4 = y, y + h, x, x + w
    # No edges gives a zero-size rectangle: fall back to the full image.
    if n2 == 0: n2 = img.shape[0]
    if n4 == 0: n4 = img.shape[1]
    image_box = img_gray[n1:n2, n3:n4]
    image_resize = cv2.resize(image_box, (32, 32), interpolation=cv2.INTER_CUBIC)
    return image_resize
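`get_image4` passes the Canny edge map to `cv2.boundingRect` to get the smallest upright rectangle `(x, y, w, h)` around the non-zero (edge) pixels. The same crop logic in plain NumPy, including the fallback to the whole image when no edges are found, is sketched below; `nonzero_box` is a hypothetical helper, and it returns the box in the notebook's `[row_start, row_end, col_start, col_end]` order with exclusive ends.

```python
import numpy as np


def nonzero_box(edges):
    """Return [row_start, row_end, col_start, col_end] enclosing all non-zero
    pixels of a 2-D edge map; fall back to the whole image if it is empty."""
    rows, cols = np.nonzero(edges)
    if rows.size == 0:
        return [0, edges.shape[0], 0, edges.shape[1]]
    # End indices are exclusive, matching Python slicing.
    return [int(rows.min()), int(rows.max()) + 1, int(cols.min()), int(cols.max()) + 1]


edges = np.zeros((10, 12), dtype=np.uint8)
edges[2, 3] = 255
edges[6, 8] = 255
r0, r1, c0, c1 = nonzero_box(edges)
crop = edges[r0:r1, c0:c1]
print((r0, r1, c0, c1), crop.shape)
```

A single stray edge far from the digits (a frame line, a screw) stretches this box, which is one reason an edge-based crop can be much looser than a hand-made one.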

Experimental Datasets

hide_code

new_filenames = get_filenames('new')

print('New files list:\n', new_filenames)

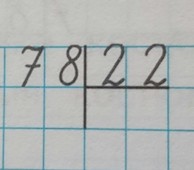

new_labels = [[8], [5, 6, 3], [5, 7], [6], [6, 1, 1],

[8], [5, 9], [1, 0, 1], [1, 0, 0, 0], [1, 9, 1, 3],

[4], [1], [3, 4, 4], [2, 4, 4, 8], [1, 5],

[7], [7, 8, 2, 2], [6, 4, 8], [2], [3, 0],

[3], [4, 3], [2, 0, 1, 0], [7, 8, 3], [1, 0, 1, 1],

[7], [1, 0], [2], [9], [8]]

new_labels = np.array([fivedigit_label(label) for label in new_labels])

print('New labels: \n',new_labels)

hide_code

image_example = get_image('new', '30.png')

print("Image size: ", image_example[0].shape)

print("Image label: ", image_example[2])

print('\nExample of image preprocessing')

plt.imshow(image_example[1], cmap=cm.Blues);

hide_code

# Load each file once and keep both the RGB and the grayscale version.
new_data = [get_image('new', x) for x in new_filenames]
new_images1 = np.array([d[0] for d in new_data])
new_images2 = np.array([d[1] for d in new_data])

The '.pickle' file

hide_code

pickle_file = 'new_digits.pickle'
save = {'new_images1': new_images1, 'new_images2': new_images2,
        'new_labels': new_labels, 'new_filenames': new_filenames}
try:
    # The context manager closes the file even if pickling fails.
    with open(pickle_file, 'wb') as f:
        pickle.dump(save, f, pickle.HIGHEST_PROTOCOL)
except Exception as e:
    print('Unable to save data to', pickle_file, ':', e)
    raise

statinfo = os.stat(pickle_file)
print('Pickle file size (bytes):', statinfo.st_size)

hide_code

pickle_file = 'new_digits.pickle'
with open(pickle_file, 'rb') as f:
    save = pickle.load(f)
    new_images1 = save['new_images1']
    new_images2 = save['new_images2']
    new_labels = save['new_labels']
    new_filenames = save['new_filenames']
    del save
print('Number of new images: ', len(new_images1))
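The two cells above are a plain `pickle` round trip of a dictionary of NumPy arrays. A self-contained version is sketched here; it writes to a temporary directory instead of `new_digits.pickle`, and the toy arrays are assumptions for illustration.

```python
import os
import pickle
import tempfile

import numpy as np

data = {'new_images2': np.random.rand(3, 32, 32).astype('float32'),
        'new_labels': np.array([[10, 10, 10, 10, 8],
                                [10, 10, 5, 6, 3],
                                [10, 10, 10, 5, 7]])}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'digits.pickle')
    # Each context manager closes its file even if an exception is raised.
    with open(path, 'wb') as f:
        pickle.dump(data, f, pickle.HIGHEST_PROTOCOL)
    size_bytes = os.path.getsize(path)
    with open(path, 'rb') as f:
        restored = pickle.load(f)

print('Pickle size (bytes):', size_bytes)
```

Pickle stores the arrays uncompressed, so the file is at least as large as the raw float data.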

hide_code

new_labels_cat = digit_to_categorical(new_labels)

print('The sixth dataset')

print("Shape of the features - {}, shape of the target - {}".format(
    new_images2.shape, new_labels_cat.shape))

hide_code

X_new = new_images2.reshape(30, 32, 32, 1)

y_new_cat_list = [new_labels_cat[:, i] for i in range(5)]

hide_code

print ('File: ', new_filenames[23])

print ('Label: ', new_labels[23])

print ('Categorical label: \n', new_labels_cat[23])

print('\nExample of loaded images')

plt.imshow(new_images2[23], cmap=plt.cm.Blues);

The image boxes

hide_code

boxes = np.array([[10, 120, 15, 100], [20, 55, 0, 120], [5, 90, 15, 140], [100, 500, 200, 500], [20, 100, 30, 160],

[5, 220, 70, 250], [10, 180, 20, 130], [5, 100, 15, 110], [10, 120, 10, 220], [150, 400, 10, 480],

[30, 150, 0, 100], [70, 220, 80, 210], [130, 250, 80, 300], [10, 60, 5, 155], [40, 80, 60, 110],

[0, 120, 0, 100], [40, 90, 10, 170], [40, 80, 30, 130], [30, 180, 20, 140], [70, 120, 40, 90],

[10, 140, 20, 90], [20, 120, 0, 130], [20, 140, 10, 290], [60, 120, 10, 110], [10, 140, 10, 340],

[20, 150, 20, 130], [10, 170, 20, 190], [10, 230, 20, 170], [10, 240, 200, 400], [10, 140, 20, 150]])

new_boxes = pd.DataFrame(data=boxes, index = new_filenames)

new_boxes.head(7)

hide_code

# Crop with the hand-made boxes; load each file once.
new_data2 = [get_image2('new', x, new_boxes) for x in new_filenames]
new_images1_2 = np.array([d[0] for d in new_data2])
new_images2_2 = np.array([d[1] for d in new_data2])
X_new2 = new_images2_2.reshape(30, 32, 32, 1)

hide_code

print ('File: ', new_filenames[1])

print ('Label: ', new_labels[1])

print ('Categorical label: \n', new_labels_cat[1])

print('\nExample of loaded images')

plt.imshow(new_images2_2[1], cmap=plt.cm.Blues);

With image boxes. OpenCV

hide_code

new_images2_3 = np.array([get_image3('new', x, new_boxes)[0] for x in new_filenames])

X_new3 = new_images2_3.reshape(30, 32, 32, 1)/255

new_images2_4 = np.array([get_image4('new', x) for x in new_filenames])

X_new4 = new_images2_4.reshape(30, 32, 32, 1)/255

Step 3: Test a Model on Newly-Captured Images

Take several pictures of numbers that you find around you (at least five), and run them through your classifier on your computer to produce example results. Alternatively (optionally), you can try using OpenCV / SimpleCV / Pygame to capture live images from a webcam and run those through your classifier.

Load models

hide_code

cnn_model = load_model('cnn_model.h5')

cnn_model2 = load_model('cnn_model2.h5')

Predictions without boxes

hide_code

y_new_predict = cnn_model.predict(X_new)

y_predict = []
for i in range(30):
    for j in range(5):
        y_predict.append(np.argmax(y_new_predict[j][i]))
y_predict = np.array(y_predict).reshape(30, 5)

print('CNN Model 1. Predictions: ')

print(y_predict)
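A five-output Keras model's `predict` returns a list with one `(n_samples, 11)` probability array per digit position. The decoding step above takes, for every image and position, the argmax over the 11 classes. Below is a sketch of the same step with array operations. It also drops the blank class 10 to recover the house number as a string. `decode_predictions` is a hypothetical name, and the synthetic probabilities stand in for `cnn_model.predict(X_new)`.

```python
import numpy as np

BLANK = 10


def decode_predictions(head_outputs):
    """Turn a list of per-position probability arrays, each (n, 11), into an
    (n, 5) array of class indices and a list of digit strings."""
    # Column j holds the most probable class of head j for every sample.
    classes = np.stack([p.argmax(axis=1) for p in head_outputs], axis=1)
    numbers = [''.join(str(d) for d in row if d != BLANK) for row in classes]
    return classes, numbers


# Synthetic "predictions" for two samples whose true labels are 8 and 563.
true = np.array([[10, 10, 10, 10, 8], [10, 10, 5, 6, 3]])
heads = [np.eye(11)[true[:, j]] * 0.9 + 0.01 for j in range(5)]
classes, numbers = decode_predictions(heads)
print(classes)
print(numbers)
```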

hide_code

y_new_predict_2 = cnn_model2.predict(X_new)

y_predict_2 = []
for i in range(30):
    for j in range(5):
        y_predict_2.append(np.argmax(y_new_predict_2[j][i]))
y_predict_2 = np.array(y_predict_2).reshape(30, 5)

print('CNN Model 2. Predictions: ')

print(y_predict_2)

hide_code

cnn_scores = cnn_model.evaluate(X_new, y_new_cat_list, verbose=0)

print("CNN Model 1. Scores: \n" , (cnn_scores))

print("CNN Model 1. First digit. Accuracy: %.2f%%" % (cnn_scores[6]*100))

print("CNN Model 1. Second digit. Accuracy: %.2f%%" % (cnn_scores[7]*100))

print("CNN Model 1. Third digit. Accuracy: %.2f%%" % (cnn_scores[8]*100))

print("CNN Model 1. Fourth digit. Accuracy: %.2f%%" % (cnn_scores[9]*100))

print("CNN Model 1. Fifth digit. Accuracy: %.2f%%" % (cnn_scores[10]*100))

hide_code

avg_accuracy = sum([cnn_scores[i] for i in range(6, 11)])/5

print("CNN Model 1. Average Accuracy: %.2f%%" % (avg_accuracy*100))
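The indices `6` to `10` above assume that `evaluate` returns the total loss, then the five per-output losses, then the five per-output accuracies. That is the ordering in the Keras 2.x releases this notebook was written for; `cnn_model.metrics_names` shows it for a given version. The same per-position accuracies can also be computed directly from decoded predictions. The sketch below also reports whole-number accuracy (all five positions correct), a stricter measure the notebook itself does not use. The arrays are illustrative.

```python
import numpy as np


def position_accuracies(y_true, y_pred):
    """Per-position accuracy (one value per digit slot) and whole-number
    accuracy (fraction of samples with every slot correct)."""
    correct = (y_true == y_pred)
    return correct.mean(axis=0), correct.all(axis=1).mean()


y_true = np.array([[10, 10, 10, 10, 8], [10, 10, 5, 6, 3], [10, 10, 10, 5, 7]])
y_pred = np.array([[10, 10, 10, 10, 8], [10, 10, 5, 8, 3], [10, 10, 10, 5, 7]])
per_position, whole = position_accuracies(y_true, y_pred)
print("Per-position accuracy:", per_position)
print("Average accuracy: %.2f%%" % (per_position.mean() * 100))
print("Whole-number accuracy: %.2f%%" % (whole * 100))
```

Because leading blanks are easy to predict, the average per-position accuracy is usually noticeably higher than the whole-number accuracy.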

hide_code

cnn_scores_2 = cnn_model2.evaluate(X_new, y_new_cat_list, verbose=0)

print("CNN Model 2. Scores: \n" , (cnn_scores_2))

print("CNN Model 2. First digit. Accuracy: %.2f%%" % (cnn_scores_2[6]*100))

print("CNN Model 2. Second digit. Accuracy: %.2f%%" % (cnn_scores_2[7]*100))

print("CNN Model 2. Third digit. Accuracy: %.2f%%" % (cnn_scores_2[8]*100))

print("CNN Model 2. Fourth digit. Accuracy: %.2f%%" % (cnn_scores_2[9]*100))

print("CNN Model 2. Fifth digit. Accuracy: %.2f%%" % (cnn_scores_2[10]*100))

hide_code

avg_accuracy_2 = sum([cnn_scores_2[i] for i in range(6, 11)])/5

print("CNN Model 2. Average Accuracy: %.2f%%" % (avg_accuracy_2*100))

Questions and Answers

Question 7

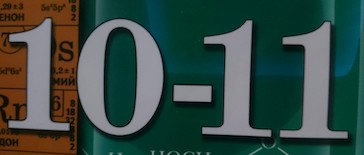

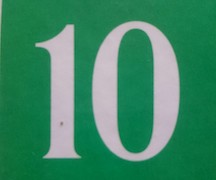

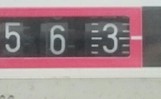

Choose five candidate images of numbers you took from around you and provide them in the report. Are there any particular qualities of the image(s) that might make classification difficult?

Answer 7

| 10.png | 11.png | 27.png | 13.png | 19.png |
|---|---|---|---|---|

These are the five candidate images. Several of their qualities make the digits hard to recognize: additional symbols ("-", border lines, strokes, circles, etc.), highly stylized fonts, and lines or grid cells in the background.

Optional: Question 9

If necessary, provide documentation for how an interface was built for your model to load and classify newly-acquired images.

Answer 9

I used the simplest procedures available for processing the images and locating the digits in the photos. They are built with the SciPy and OpenCV libraries. Each image is cropped (with a hand-made box, or with the bounding rectangle of its Canny edges), converted to grayscale, resized to 32x32 pixels, scaled to [0, 1] and reshaped to (1, 32, 32, 1) before it is passed to the model. The predicted digit at each of the five positions is the class with the highest probability, where class 10 means "no digit". These steps need no special skills or documentation to apply.

The models were built with Keras, a very well-known library. Thanks to its ease of use and its almost unlimited possibilities for composing models, it works much like a Lego construction set. I do not think any special documentation is needed in this case.
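The preprocessing chain described above can be sketched with NumPy alone, following the order used in `get_image2`: crop with a `[row_start, row_end, col_start, col_end]` box, resize to 32x32, scale to [0, 1], then convert to grayscale with the weights 0.299, 0.587, 0.114. The nearest-neighbour resize via index arrays is a simplification standing in for `scipy.misc.imresize` / `cv2.resize`, so pixel values will differ slightly from the notebook's. `preprocess_for_cnn` is a hypothetical name, and the random "photo" is illustrative.

```python
import numpy as np

GRAY_WEIGHTS = np.array([0.299, 0.587, 0.114], dtype='float32')


def preprocess_for_cnn(rgb, box, size=32):
    """Crop an RGB uint8 image to box=[r0, r1, c0, c1], resize it to
    size x size (nearest neighbour), scale to [0, 1] and convert to grayscale.
    Returns an array shaped (1, size, size, 1), ready for model.predict."""
    r0, r1, c0, c1 = box
    crop = rgb[r0:r1, c0:c1]
    # Pick evenly spaced source rows and columns (nearest-neighbour resize).
    rows = np.linspace(0, crop.shape[0] - 1, size).round().astype(int)
    cols = np.linspace(0, crop.shape[1] - 1, size).round().astype(int)
    resized = crop[rows][:, cols].astype('float32') / 255
    gray = resized @ GRAY_WEIGHTS
    return gray.reshape(1, size, size, 1)


photo = np.random.randint(0, 256, size=(120, 160, 3), dtype=np.uint8)
x = preprocess_for_cnn(photo, [10, 90, 20, 140])
print(x.shape)
```

With a trained model, `cnn_model.predict(x)` would then return the five per-position probability arrays.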

Step 4: Explore an Improvement for a Model

There are many things you can do once you have the basic classifier in place. One example would be to also localize where the numbers are on the image. The SVHN dataset provides bounding boxes that you can tune to train a localizer. Train a regression loss to the coordinates of the bounding box, and then test it.
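The suggested localizer regresses bounding-box coordinates. The notebook does not train one, so the following setup is only an assumption. Normalize each `[row_start, row_end, col_start, col_end]` box by the image height and width so the targets lie in [0, 1], then minimize the mean squared error between predicted and true coordinates. A NumPy sketch of the targets and the loss, with hypothetical names `normalize_boxes` and `box_mse`:

```python
import numpy as np


def normalize_boxes(boxes, image_shapes):
    """Scale [r0, r1, c0, c1] boxes by each image's (height, width)."""
    boxes = np.asarray(boxes, dtype=float)
    hw = np.asarray(image_shapes, dtype=float)[:, :2]
    # Row coordinates are divided by the height, column coordinates by the width.
    scale = np.stack([hw[:, 0], hw[:, 0], hw[:, 1], hw[:, 1]], axis=1)
    return boxes / scale


def box_mse(pred, target):
    """Mean squared error over all samples and the four coordinates."""
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))


targets = normalize_boxes([[10, 120, 15, 100]], [(200, 400, 3)])
print(targets)
print(box_mse(targets + 0.1, targets))
```

Normalizing makes boxes from photos of different sizes comparable, so a single regression head can be trained on all of them.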

Predictions with boxes

hide_code

y_new_predict2 = cnn_model.predict(X_new2)

y_predict2 = []
for i in range(30):
    for j in range(5):
        y_predict2.append(np.argmax(y_new_predict2[j][i]))
y_predict2 = np.array(y_predict2).reshape(30, 5)

print('CNN Model 1. Predictions: ')

print(y_predict2)

hide_code

cnn_scores2 = cnn_model.evaluate(X_new2, y_new_cat_list, verbose=0)

print("CNN Model 1. Scores: \n" , (cnn_scores2))

print("CNN Model 1. First digit. Accuracy: %.2f%%" % (cnn_scores2[6]*100))

print("CNN Model 1. Second digit. Accuracy: %.2f%%" % (cnn_scores2[7]*100))

print("CNN Model 1. Third digit. Accuracy: %.2f%%" % (cnn_scores2[8]*100))

print("CNN Model 1. Fourth digit. Accuracy: %.2f%%" % (cnn_scores2[9]*100))

print("CNN Model 1. Fifth digit. Accuracy: %.2f%%" % (cnn_scores2[10]*100))

hide_code

avg_accuracy2 = sum([cnn_scores2[i] for i in range(6, 11)])/5

print("CNN Model 1. Average Accuracy: %.2f%%" % (avg_accuracy2*100))

hide_code

y_new_predict2_2 = cnn_model2.predict(X_new2)

y_predict2_2 = []
for i in range(30):
    for j in range(5):
        y_predict2_2.append(np.argmax(y_new_predict2_2[j][i]))
y_predict2_2 = np.array(y_predict2_2).reshape(30, 5)

print('CNN Model 2. Predictions: ')

print(y_predict2_2)

hide_code

cnn_scores2_2 = cnn_model2.evaluate(X_new2, y_new_cat_list, verbose=0)

print("CNN Model 2. Scores: \n" , (cnn_scores2_2))

print("CNN Model 2. First digit. Accuracy: %.2f%%" % (cnn_scores2_2[6]*100))

print("CNN Model 2. Second digit. Accuracy: %.2f%%" % (cnn_scores2_2[7]*100))

print("CNN Model 2. Third digit. Accuracy: %.2f%%" % (cnn_scores2_2[8]*100))

print("CNN Model 2. Fourth digit. Accuracy: %.2f%%" % (cnn_scores2_2[9]*100))

print("CNN Model 2. Fifth digit. Accuracy: %.2f%%" % (cnn_scores2_2[10]*100))

hide_code

avg_accuracy2_2 = sum([cnn_scores2_2[i] for i in range(6, 11)])/5

print("CNN Model 2. Average Accuracy: %.2f%%" % (avg_accuracy2_2*100))

Individual predictions for images. OpenCV

hide_code

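# Look up the file's index instead of relying on the os.listdir order.
idx = int(np.where(new_filenames == '10.png')[0][0])
image_gray = get_image3('new', new_filenames[idx], new_boxes)[0]
print('File: ', new_filenames[idx])
print('Label: ', new_labels[idx])
print('Categorical label: \n', new_labels_cat[idx])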

plt.imshow(image_gray, cmap=plt.cm.Blues);

hide_code

image_for_predict = image_gray.reshape(1, 32, 32, 1)/255

y_image_predict = cnn_model.predict(image_for_predict)

y_predict_example = [np.argmax(y) for y in y_image_predict]

print('Predicted label:')

print(y_predict_example)

hide_code

idx = int(np.where(new_filenames == '30.png')[0][0])
image_gray_2 = get_image4('new', new_filenames[idx])
print('File: ', new_filenames[idx])
print('Label: ', new_labels[idx])
print('Categorical label: \n', new_labels_cat[idx])

plt.imshow(image_gray_2, cmap=plt.cm.Blues);

hide_code

image_for_predict_2 = image_gray_2.reshape(1, 32, 32, 1)/255

y_image_predict_2 = cnn_model2.predict(image_for_predict_2)

y_predict_example_2 = [np.argmax(y) for y in y_image_predict_2]

print('Predicted label:')

print(y_predict_example_2)

Predictions with boxes. OpenCV

hide_code

image_gray2 = new_images2_4[1]

print ('File: ', new_filenames[1])

print ('Label: ', new_labels[1])

print ('Categorical label: \n', new_labels_cat[1])

plt.imshow(image_gray2, cmap=plt.cm.Blues);

hide_code

image_gray2_2 = new_images2_4[23]

print ('File: ', new_filenames[23])

print ('Label: ', new_labels[23])

print ('Categorical label: \n', new_labels_cat[23])

plt.imshow(image_gray2_2, cmap=plt.cm.Blues);

hide_code

y_new_predict4 = cnn_model.predict(X_new4)

y_predict4 = []
for i in range(30):
    for j in range(5):
        y_predict4.append(np.argmax(y_new_predict4[j][i]))
y_predict4 = np.array(y_predict4).reshape(30, 5)

print('CNN Model 1. Predictions: ')

print(y_predict4)

hide_code

cnn_scores4 = cnn_model.evaluate(X_new4, y_new_cat_list, verbose=0)

print("CNN Model 1. \n")

print("Scores: \n" , (cnn_scores4))

print("First digit. Accuracy: %.2f%%" % (cnn_scores4[6]*100))

print("Second digit. Accuracy: %.2f%%" % (cnn_scores4[7]*100))

print("Third digit. Accuracy: %.2f%%" % (cnn_scores4[8]*100))

print("Fourth digit. Accuracy: %.2f%%" % (cnn_scores4[9]*100))

print("Fifth digit. Accuracy: %.2f%%" % (cnn_scores4[10]*100))

hide_code

avg_accuracy4 = sum([cnn_scores4[i] for i in range(6, 11)])/5

print("CNN Model 1. Average Accuracy: %.2f%%" % (avg_accuracy4*100))

hide_code

y_new_predict4_2 = cnn_model2.predict(X_new4)

y_predict4_2 = []
for i in range(30):
    for j in range(5):
        y_predict4_2.append(np.argmax(y_new_predict4_2[j][i]))
y_predict4_2 = np.array(y_predict4_2).reshape(30, 5)

print('CNN Model 2. Predictions: ')

print(y_predict4_2)

hide_code

cnn_scores4_2 = cnn_model2.evaluate(X_new4, y_new_cat_list, verbose=0)

print("CNN Model 2. \n")

print("CNN Scores: \n" , (cnn_scores4_2))

print("First digit. Accuracy: %.2f%%" % (cnn_scores4_2[6]*100))

print("Second digit. Accuracy: %.2f%%" % (cnn_scores4_2[7]*100))

print("Third digit. Accuracy: %.2f%%" % (cnn_scores4_2[8]*100))

print("Fourth digit. Accuracy: %.2f%%" % (cnn_scores4_2[9]*100))

print("Fifth digit. Accuracy: %.2f%%" % (cnn_scores4_2[10]*100))

hide_code

avg_accuracy4_2 = sum([cnn_scores4_2[i] for i in range(6, 11)])/5

print("CNN Model 2. Average Accuracy: %.2f%%" % (avg_accuracy4_2*100))

Question 10

How well does your model localize numbers on the testing set from the realistic dataset? Do your classification results change at all with localization included?

Answer 10

On the realistic test set of localized digits, the model works well: its average accuracy is about 95 percent.

I did not train or test the model on sets without localization. In my opinion, images that are not localized contain a lot of information that is useless for digit recognition. This creates unnecessary noise and difficulties for the neural network.

Question 11

Test the localization function on the images you captured in Step 3. Does the model accurately calculate a bounding box for the numbers in the images you found? If you did not use a graphical interface, you may need to investigate the bounding boxes by hand. Provide an example of the localization created on a captured image.

Answer 11

The bounding boxes are not predicted by the CNN: they are either drawn by hand or found with OpenCV (Canny edges plus a bounding rectangle). The model classifies the newly captured images very well when their boxes are drawn by hand. With the automatic OpenCV procedure, however, its accuracy is about the same as for images without any bounding boxes. So the part that needs improvement is the procedure that cuts away the image regions without digits.

I illustrate this with two example files.
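To compare the automatic boxes with the hand-made ones numerically rather than by eye, a standard measure is intersection over union (IoU). It is the area of the overlap of two boxes divided by the area of their union: 1 for identical boxes and 0 for disjoint ones. The sketch below uses the notebook's `[row_start, row_end, col_start, col_end]` format with exclusive ends. Comparing `new_boxes` with the rectangles found in `get_image4` this way is a suggestion, not something the notebook does. `get_image4` also trims a 3-pixel border before finding its rectangle, so those coordinates would need a +3 offset first.

```python
def box_iou(a, b):
    """IoU of two boxes given as [row_start, row_end, col_start, col_end]."""
    inter_h = max(0, min(a[1], b[1]) - max(a[0], b[0]))
    inter_w = max(0, min(a[3], b[3]) - max(a[2], b[2]))
    inter = inter_h * inter_w
    area_a = (a[1] - a[0]) * (a[3] - a[2])
    area_b = (b[1] - b[0]) * (b[3] - b[2])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


hand_box = [10, 120, 15, 100]   # first row of the hand-made boxes array
auto_box = [20, 110, 15, 100]   # illustrative automatic box
print(round(box_iou(hand_box, auto_box), 3))
```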

Step 5: Build an Application or Program for a Model

Take your project one step further. If you're interested, look to build an Android application or even a more robust Python program that can interface with input images and display the classified numbers and even the bounding boxes. You can for example try to build an augmented reality app by overlaying your answer on the image like the Word Lens app does.

Loading a TensorFlow model into a camera app on Android is demonstrated in the TensorFlow Android demo app, which you can simply modify.

If you decide to explore this optional route, be sure to document your interface and implementation, along with significant results you find. You can see the additional rubric items that you could be evaluated on by following this link.

hide_code


train_images = pd.read_csv("train_images1.csv")

train_images = np.array(train_images.drop('filename', axis=1))

train_images = train_images.reshape(-1, 32, 32, 3)

train_labels = pd.read_csv("train_labels.csv")

train_labels = np.array(train_labels[["0", "1", "2", "3", "4"]])

train_labels_cat = digit_to_categorical(train_labels)

train_labels_cat_list = [train_labels_cat[:, i] for i in range(5)]

test_images = pd.read_csv("test_images1.csv")

test_images = np.array(test_images.drop('filename', axis=1))

test_images = test_images.reshape(-1, 32, 32, 3)

test_labels = pd.read_csv("test_labels.csv")

test_labels = np.array(test_labels[["0", "1", "2", "3", "4"]])

test_labels_cat = digit_to_categorical(test_labels)

test_labels_cat_list = [test_labels_cat[:, i] for i in range(5)]

hide_code

train_images.shape, test_images.shape, train_labels_cat.shape, test_labels_cat.shape

hide_code

from keras.applications.vgg16 import VGG16

VGG16_model = VGG16(weights='imagenet', include_top=False)

bn_train = VGG16_model.predict(train_images)

bn_test = VGG16_model.predict(test_images)

hide_code

np.save('bn_train.npy', bn_train.reshape(-1, bn_train.shape[3]))

np.save('bn_test.npy', bn_test.reshape(-1, bn_test.shape[3]))

hide_code

bn_train = np.load('bn_train.npy')

bn_test = np.load('bn_test.npy')

bn_train = bn_train.reshape(-1, 1, 1, bn_train.shape[1])

bn_test = bn_test.reshape(-1, 1, 1, bn_test.shape[1])
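The flatten-and-restore step above works because VGG16's five 2x2 max-pooling stages reduce a 32x32 input to a 1x1 spatial map (32, 16, 8, 4, 2, 1), so each sample's bottleneck is a single 512-value vector. The sketch below checks that assumption before flattening. `save_bottleneck` and `load_bottleneck` are hypothetical names, a random array stands in for `VGG16_model.predict(train_images)`, and a temporary directory replaces `bn_train.npy`.

```python
import os
import tempfile

import numpy as np


def save_bottleneck(path, features):
    """Store (n, 1, 1, c) features as a 2-D (n, c) array."""
    # Flattening to (n, c) is only lossless when the spatial map is 1 x 1.
    if features.shape[1:3] != (1, 1):
        raise ValueError("expected a 1x1 spatial map, got %s" % (features.shape[1:3],))
    np.save(path, features.reshape(-1, features.shape[3]))


def load_bottleneck(path):
    """Reload the features in the (n, 1, 1, c) layout the top model expects."""
    flat = np.load(path)
    return flat.reshape(-1, 1, 1, flat.shape[1])


features = np.random.rand(4, 1, 1, 512).astype('float32')
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'bn_train.npy')
    save_bottleneck(path, features)
    restored = load_bottleneck(path)
print(restored.shape)
```

For larger inputs (for example 64x64, giving 2x2x512), the same reshape would silently mix spatial positions into extra rows, which the check guards against.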

hide_code

def build_vgg16_add_model():
    """Top model trained on VGG16 bottleneck features: a shared dense trunk
    with five 11-way softmax heads, one per digit position."""
    model_input = Input(shape=bn_train.shape[1:])
    x = GlobalAveragePooling2D()(model_input)
    x = Dense(1024, activation='relu')(x)
    x = Dropout(0.5)(x)
    x = Dense(256, activation='relu')(x)
    x = Dropout(0.5)(x)
    outputs = [Dense(11, activation='softmax')(x) for _ in range(5)]
    # Keras 2 uses the `inputs`/`outputs` keywords (`input`/`output` are deprecated).
    model = Model(inputs=model_input, outputs=outputs)
    model.compile(loss='categorical_crossentropy', optimizer='nadam',
                  metrics=['accuracy'])
    return model

hide_code

# Use a distinct name for the instance so the builder function is not shadowed.
vgg16_add_model = build_vgg16_add_model()
vgg16_checkpointer = ModelCheckpoint(filepath='weights.best.vgg16_add.hdf5',
                                     verbose=1, save_best_only=True)

hide_code

vgg16_add_history = vgg16_add_model.fit(bn_train, train_labels_cat_list,

validation_data=(bn_test, test_labels_cat_list),

epochs=50, batch_size=128,

callbacks=[vgg16_checkpointer], verbose=0);

hide_code

vgg16_add_model.load_weights('weights.best.vgg16_add.hdf5')

vgg16_add_scores = vgg16_add_model.evaluate(bn_test, test_labels_cat_list, verbose=0)

print("VGG16 ADD Model. \n")

print("Scores: \n" , (vgg16_add_scores))

print("First digit. Accuracy: %.2f%%" % (vgg16_add_scores[6]*100))

print("Second digit. Accuracy: %.2f%%" % (vgg16_add_scores[7]*100))

print("Third digit. Accuracy: %.2f%%" % (vgg16_add_scores[8]*100))

print("Fourth digit. Accuracy: %.2f%%" % (vgg16_add_scores[9]*100))

print("Fifth digit. Accuracy: %.2f%%" % (vgg16_add_scores[10]*100))

vgg16_avg_accuracy = sum([vgg16_add_scores[i] for i in range(6, 11)])/5

print("VGG16 Model. Average Accuracy: %.2f%%" % (vgg16_avg_accuracy*100))

idx = int(np.where(new_filenames == '6.png')[0][0])
filename = os.path.join('new', new_filenames[idx])
img = cv2.cvtColor(cv2.imread(filename), cv2.COLOR_BGR2RGB)
img_vgg16 = cv2.resize(img, (32, 32))
print('File: ', new_filenames[idx])
print('Label: ', new_labels[idx])
print('Categorical label: \n', new_labels_cat[idx])

plt.imshow(img_vgg16);

img_vgg16 = img_vgg16.reshape(1,32,32,3)

predict_vgg16 = VGG16_model.predict(img_vgg16)

predict_vgg16_add = vgg16_add_model.predict(predict_vgg16)

predict_label_vgg16 = [np.argmax(y) for y in predict_vgg16_add]

print('Predicted label:')

print(predict_label_vgg16)

Documentation

Provide additional documentation sufficient for detailing the implementation of the Android application or Python program for visualizing the classification of numbers in images. It should be clear how the program or application works. Demonstrations should be provided.
